What's a "Nano Banana," anyway?

Please assume that we've already engaged in a thoughtful, in-depth discussion about the many ethical parameters of generative AI. Okay?

"…so, clearly, we're no closer to answering an absolutely essential question: although generative AI tools have the potential to empower hundreds of millions of people to fulfill their creative visions as never before, does this benefit outweigh the sketchy circumstances under which these models were trained, to say nothing of the impact on the environment?"

Great! Now we can play with Gemini's newly upgraded image editing/manipulation model, and I can feel a bit less guilty when I say how impressed and pleased I am with it after a day of use.

The model is called "Nano Banana" and its features are available wherever you're using Gemini (on the web or on the phone app). It's not fully reliable by any means. It still harbors two problems consistently found in AI tools of all kinds:

- It gives you what it thinks you've asked for. Not necessarily what you actually asked for. These two things don't always coincide.

- It sometimes isn't aware that it's wrong. And it happens in ways that frustrate the bejeezus out of me. I don't expect an AI to always be right. I'm just astounded that it can be so confident about such an obviously wrong result.

That said, it's a huge leap toward a Holy Grail: being able to edit images just by describing, in plain English, what you'd like to fix.

(Side note: it's hard to keep up with all of these AI model updates. It's entirely possible that one of these examples shows off an ability that was available in Gemini's pre-Banana era. I make the rounds of "What Can (insert name of chatbot/model) Do?" every month or two, and all of this seems very new to me, at least at this level of execution.)

Here's a common editing problem:

I couldn't get a clean shot of these gorgeous clouds yesterday because power lines and poles were always somewhere in the way.

Damn and blast. This photo is a fine example of the problem but now that I'm looking at it, I kind of like it. But you know what I'm talking about. The history and timelessness of a beautiful Colonial-era village church is ruined by phone lines cutting across the steeple, etc.

I added this photo to a Gemini chat and then I prompted "Remove all of the power lines and poles." Presto:

I wonder why Gemini chose to add a clump of trees in the lower right corner. It's definitely not in the image in my phone's camera roll. But otherwise, this is spot-on perfect.

Note that there's a Gemini logo in the corner. Gemini also embeds an invisible SynthID digital watermark.

Another example shows off the new model's strengths, as well as a problem with this kind of AI-based editing…even when it works great.

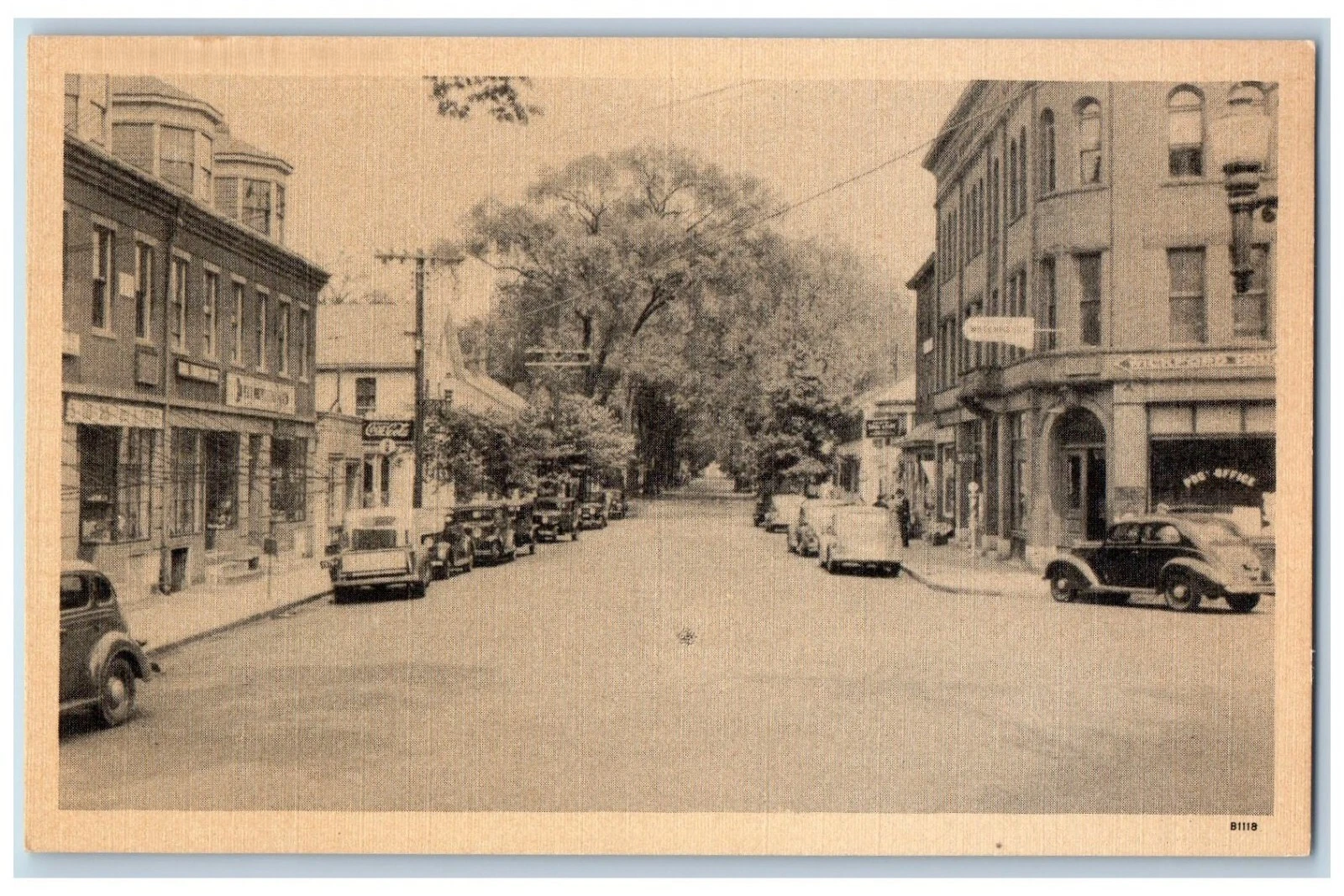

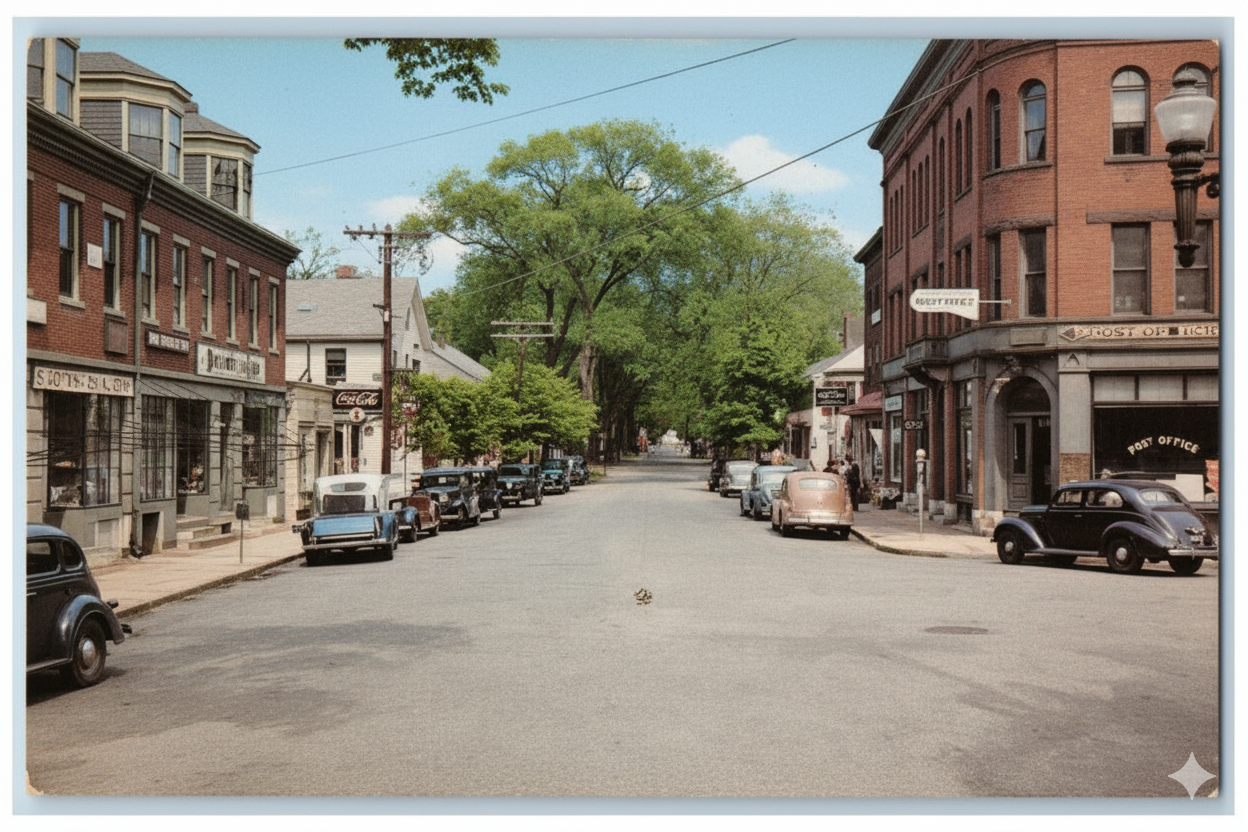

"Can you turn this vintage postcard into a high-resolution color photo?"

That's an awfully good-looking result. It's a lovely colorization job. But it didn't do the second thing: the Gemini-enhanced photo is at the same resolution as the original. This issue will resurface later.

That's not the trouble I was referring to, however. Check out some of the details in the AI-enhanced image:

I'm not surprised that Gemini mangled these two shop signs. They're hard to make out in the original. However! The sign on the left definitely does not read "Post Office" in the original. Gemini seems to have taken a guess, based on the lettering in the store window.

My friend and "Material" podcast co-host Florence Ion has been testing out the new Pixel 10 Pro and discovered something worrisome about the phone's 100x AI-powered digital zoom. The photos look super. But unlike traditional phone-AI photo features, which use machine learning when forced to take an informed guess as to what's there, the Pixel's super-zoom uses generative AI to invent what it thinks ought to be there.

The result, in one of Flo's example 100x zoom photos, is a ship with what appears to be stylized, vaguely Arabic-script-looking gibberish on the bow in place of the vessel's christened name, "Golden Bear."

It doesn't look like blurry text that's been sharpened by an algorithm driven to an act of desperation. It looks like generative-AI fakery. And as a society, we've learned to recognize generative-AI fakery. When we spot that single hair in our soup, we send the whole bowl back to the kitchen. We leave the metaphorical restaurant in a righteous huff if the manager rebuffs our arguments in favor of a metaphorical free replacement entree and a dessert.

Consequently, Gemini – or any other feature that uses generative AI to edit and fix photos – can throw the integrity of the whole image into doubt. You can snap a photo of the damage that a UPS driver did when they backed into your car, and send it to the insurance people straight out of your camera…but will an AI-tattooed street sign in the deep background enable the driver's insurance carrier to deny the truthiness of the entire photo?

Back to "Fun With Bananas."

Google's blog post about Nano Banana encouraged me to try a lot of super-nutty and nonlinear ideas. But I said at the top that the Holy Grail for AI image editing is simply about making your photos look better. Few people are clamoring to re-do family photos so that everybody's been changed into rainbow raccoons.

(Brain: "Wait, you're just going to move on to the next paragraph? You're not even curious?")

(I sigh. I select a family photo. I tell Gemini "Create a version of this photo in which all of the people are raccoons with rainbow-colored fur." Gemini responds with an utterly unchanged version of that same photo.)

(I am not surprised. But brain follows this up with "Now try the cast of 'Friends'." I mutter that this is definitely the last time and after this we must get on with what we're trying to write.)

(I interrupt my brain halfway through its protest that I forgot the thing about the rainbow fur, and testily declare that we're moving on.)

I said at the top that the Holy Grail of this kind of AI-powered image editing is to enable people to improve their photos by just making requests in plain language, instead of wading through the Photos app's multiple tabs of adjustment sliders.

Let's give that a try. Here's a photo, without any edits.

It's deeply underexposed and the birdie is also blending into the background, as God or whatever intended. Overall, not a remarkable photo.

And here it is again, edited three times. I did one of them myself, in Photoshop, quickly (less than fifteen minutes). One of them was enhanced by just clicking the Adobe Camera RAW tool's auto-everything button. One of them was enhanced by the new Gemini, using the single basic prompt "Improve the lighting, detail, and color of this photo as best you can."

Without knowing which is which, pick out your favorite. I'm going to have to trust you not to look in the lower-right corner for a Gemini watermark:

My favorite is the one in the middle. It kind of has to be. It's the one I Photoshopped; by definition it's what I want the photo to look like (within the "casual editing" time limit).

Gemini's version is on the left, and "Click 'auto' in Adobe Camera RAW" is on the right. Dang, I'm impressed! It's my second-favorite and it's exactly what I would have hoped for from that prompt. Too bad that it's been downscaled from 20 megapixels to about 1.2 megapixels. But there are reasonable limits to how much number-crunching you should expect from a free AI app.

Well, almost exactly. When I Photoshopped the image, I made the background a little brighter so that the bird "popped" more.

So I asked Gemini "Can you make the background brighter?" The result was very surprising!

"You were surprised that Gemini brightened the whole image?" you ask. No, and I'm not even sure that Gemini brightened the background more than it brightened the bird.

Here, check out these side-by-side details:

Right?!

I didn't even notice it until I zoomed in close: it's definitely the exact same sparrow (compare the bird's markings in the full side-by-side) but Gemini slightly re-posed its head in this second round of edits!

It would be a big enough surprise on its own. But it's doubly so because Google has been praising Banana-fied Gemini for its ability to retain details through multiple generations of image edits. I myself have been impressed with this characteristic during these past 24 hours of playing around.

Here's why:

- First, I gave it an Amazon product photo and said "I want the hand to be wearing this golf glove." (Affiliate link because it's not a tech-related item and I'm also a weasel who's eager for Amazon credits).

- Then "Change the asphalt to green grass."

- Followed by "Change the time to 12:34."

After three rounds of changes, the text on the watch case is unchanged, as are the watch icons. Gemini even left my arm hair 100% alone.

While I'm at it: I'm pleased that it 100% understood what "change the time" meant. I didn't have to mention that it's on the watch, or what the original numbers were. I didn't have to tell it to match the font and the position.

That said, the new Gemini hasn't earned an A+. Over the past day, it's failed to do some dispiritingly simple things. I added a bird to the sky in that cloud photo. It's OK that Gemini chose to place it off-center (I hadn't specified a position). The subsequent prompt of "Reframe the photo so that the bird is in the center" seemed like a total softball but Gemini responded with an unchanged photo and a proud boast that it had done just what I'd asked.

(Gemini? You can't compare your results to the original and figure out on your own that you obviously didn't do what I asked? I guess this is why Gemini, like many Google apps, has a feedback button right where it's likely to be used.)

Gemini with Nano Banana is a hell of a lot of fun, and I wound up spending a lot of time just playing with it. I'm not surprised that it messed up one of my more…fanciful…prompts:

"I want this elephant (photo) to be riding this scooter (photo) down this street (photo)." All right, that was a big ask.

Still: this is an impressive development, overall. And I expect that Google will continue to improve the model behind the scenes. I'm looking forward to seeing whether it becomes more reliable and agile over the coming months.

I'm also adding an item to a long list of concerns about the future of photography. The potential for generative AI to be abused by people with bad intentions has been talked to death. We ought to also worry about people introducing undetectable falsehoods into their day-to-day snapshots, without even knowing it, just by asking their Photos app to add a little more light to the faces. Or just by taking the photo with an AI-powered camera at all.
